Visualise pointing your phone at a complex board g...

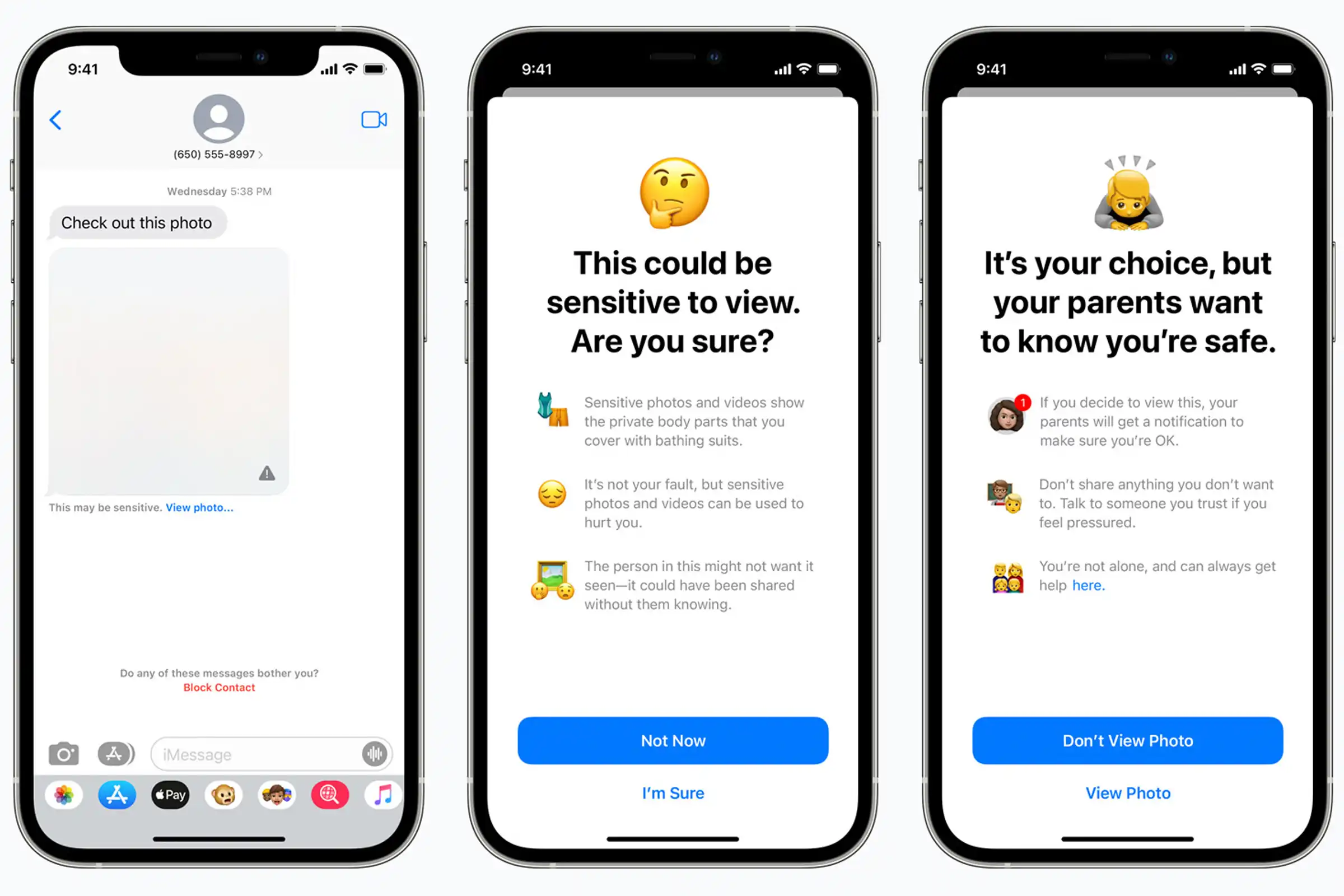

It's important to note that the feature drew harsh criticism even before Apple released it. Security experts and even some tech company employees warned against it.

The digital juggernaut headquartered in Cupertino delayed the launch in response to user complaints. The company had initially planned to offer the detection feature before the end of 2021.

Since then, the tech giant had remained silent about it until today, almost a year later.
