DuckAssist offers a Wikipedia summary powered by artificial intelligence

March 09, 2023 By Monica Green

(Image Credit Google)

Image Credit: Seosandwitch

To keep up with the rush to incorporate generative AI into search, DuckDuckGo on Wednesday unveiled DuckAssist, an AI-powered factual summary service based on Anthropic and OpenAI technology. Users of DuckDuckGo's browser extensions and browsing apps can access it for free right now as part of a large beta test. Because DuckAssist is powered by an AI model, the company acknowledges that it may make things up, but it anticipates this happening infrequently.

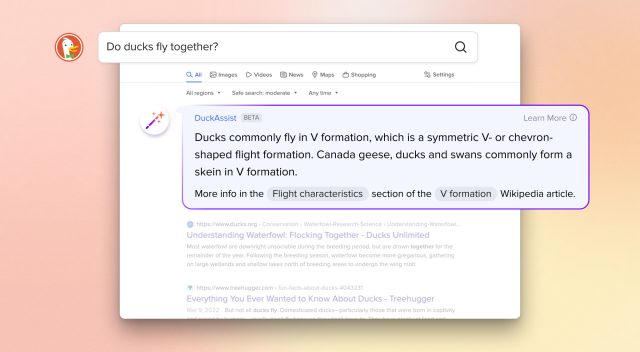

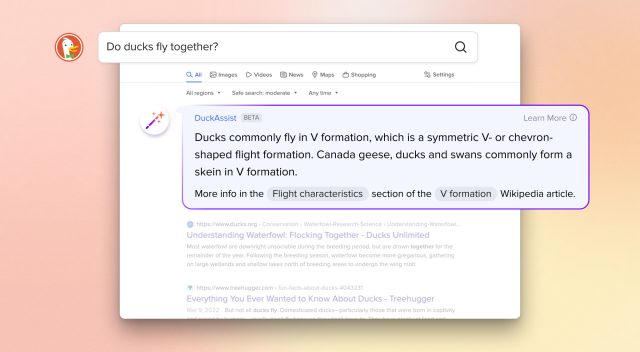

When a DuckDuckGo user searches for an answer that can be found in Wikipedia, DuckAssist may appear and use AI natural language technology to produce a concise summary of what it finds, along with a list of its sources. DuckDuckGo displays the summary in a separate box above the regular search results. That is the extent of what it does.

Demonstration screenshot of DuckAssist

The company describes DuckAssist as a new type of "Instant Answer," a feature that saves users from having to sift through web search results to find quick information on subjects like news, maps, and weather. The search engine displays Instant Answer results above the standard list of websites.

Although the OpenAI API seems a likely candidate, DuckDuckGo does not specify which large language model (LLM) or models power DuckAssist.

Because privacy is DuckDuckGo's main selling point, the company emphasizes that DuckAssist is "anonymous" and that it does not share search or browsing history with anyone. DuckDuckGo CEO Gabriel Weinberg adds, “We maintain your search and browsing history's anonymity to our search content partners, in this case, OpenAI and Anthropic, used for summarizing the Wikipedia sentences we identify.”

What about the Hallucination factor?

LLMs have a propensity to produce convincingly false results, which AI researchers call "hallucinations," a term of art. Hallucinations can be difficult to recognize unless you are familiar with the material being discussed, and they arise in part because GPT-style LLMs such as OpenAI's do not distinguish between fact and fiction in their datasets. The models may also draw erroneous conclusions from reliable data.

To reduce hallucinations, DuckDuckGo relies heavily on Wikipedia as a source. "By asking DuckAssist to only summarize information from Wikipedia and related sources," Weinberg writes, "the probability that it will 'hallucinate'—that is, just make something up—is greatly diminished."

Using a reliable source of data can help reduce errors caused by inaccurate data in the AI's dataset, but it might not help reduce false inferences. DuckDuckGo also places the onus of fact-checking on the user by providing a source link beneath the AI-generated result that can be used to assess its accuracy. Even so, it won't be perfect, and CEO Weinberg acknowledges this: "However, DuckAssist won't always generate accurate answers. We fully anticipate that it will make errors."

As more companies deploy LLM technology that is easily misled, it may take time and extensive use before firms and customers decide what level of hallucination is acceptable in an AI-powered product that is intended to inform people accurately.
