
EnCharge AI was founded by Verma, Echere Iroaga, and Kailash Gopalakrishnan. Verma is the director of Princeton University's Keller Center for Innovation in Engineering Education, while Gopalakrishnan spent more than 18 years at IBM, where he was an IBM Fellow.

Iroaga joined the company after serving as vice president and general manager at semiconductor maker Macom.

EnCharge traces its origins to government funding that Verma and collaborators at the University of Illinois at Urbana-Champaign received in 2017. Verma led an $8.3 million project under DARPA's Electronics Resurgence Initiative that investigated cutting-edge non-volatile memory technologies.

Since non-volatile memory can retain data even when the power is off, it has the potential to be more energy-efficient than the traditional "volatile" memory found in modern computers.

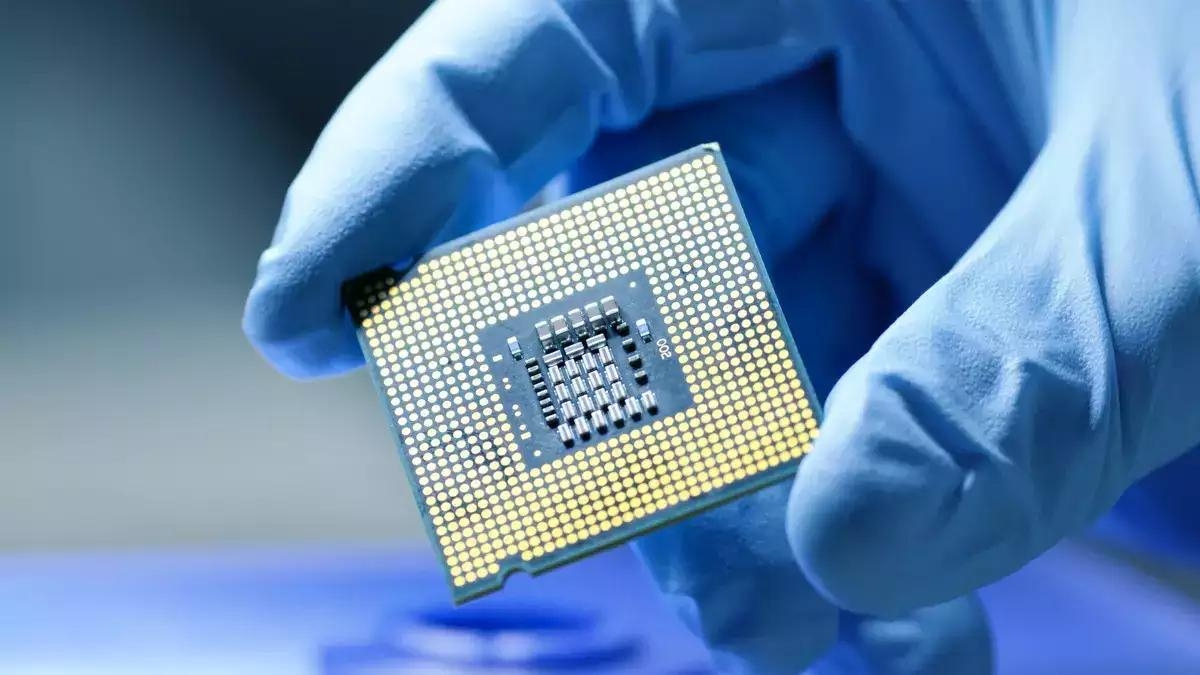

Non-volatile memory commonly takes the form of flash memory and magnetic storage devices like hard drives and floppy discs.

DARPA also supported Verma's research on in-memory processing for machine learning calculations. "In-memory" here means performing these calculations directly in RAM rather than shuttling data to and from storage media, which adds latency.
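To make the data-movement argument concrete, here is a toy Python model that counts how many values cross the memory/processor boundary during one matrix-vector multiply, the core operation in neural-network inference. It is a hypothetical illustration under simplifying assumptions (an idealised array in which stored weights never move, with transfer counts standing in for latency and energy), not a description of EnCharge's circuits. The names `TransferCounter`, `conventional_matvec` and `in_memory_matvec` are invented for this sketch.

```python
# Hypothetical toy model: counts values moved between memory and processor.
# It is NOT EnCharge's design; transfer counts are only a proxy for cost.
from dataclasses import dataclass


@dataclass
class TransferCounter:
    """Tallies values moved between memory and the processor."""
    words_moved: int = 0


def conventional_matvec(weights, x, counter):
    # Von Neumann style: every operand must travel to the processor.
    counter.words_moved += len(x)  # load the input vector
    out = []
    for row in weights:
        acc = 0
        for w, xi in zip(row, x):
            counter.words_moved += 1  # fetch each weight from memory
            acc += w * xi
        out.append(acc)
    counter.words_moved += len(out)  # write the results back to memory
    return out


def in_memory_matvec(weights, x, counter):
    # Idealised in-memory array (an assumption): weights stay inside the
    # memory cells and each row accumulates its dot product in place.
    counter.words_moved += len(x)  # broadcast inputs into the array
    out = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    counter.words_moved += len(out)  # read the results out
    return out


if __name__ == "__main__":
    W = [[1, 2, 3], [4, 5, 6]]  # 2x3 weight matrix, e.g. one tiny layer
    x = [1, 0, -1]
    conv, imc = TransferCounter(), TransferCounter()
    print(conventional_matvec(W, x, conv), conv.words_moved)  # [-2, -2] 11
    print(in_memory_matvec(W, x, imc), imc.words_moved)  # [-2, -2] 5
```

Both functions return the same result; only the traffic differs. For an m×n weight matrix the conventional version moves m·n + m + n values, while the idealised in-memory version moves only m + n, so the gap widens as models grow.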

EnCharge was founded to commercialise Verma's discoveries, supplying hardware in the standard PCIe form factor.

Verma claimed that, by using in-memory processing, EnCharge's plug-in hardware may speed up artificial intelligence (AI) applications on servers and "network edge" devices while consuming less power than conventional computer processors.