The blog post said, "An NPMP is a general-purpose motor control module that translates short-horizon motor intentions to low-level control signals, and it's trained offline or via RL by imitating motion capture (MoCap) data, recorded with trackers on humans or animals performing motions of interest."
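The two-level split described in that quote can be illustrated with a minimal sketch. It is not the team's implementation: the class `MotorDecoder`, the function `high_level_policy`, the dimensions, and the random weights are all hypothetical stand-ins, and a real module would learn its parameters by imitating MoCap clips.

```python
import math
import random


class MotorDecoder:
    """Hypothetical low-level module: (body state, intention) -> control signals."""

    def __init__(self, obs_dim, latent_dim, action_dim, seed=0):
        rng = random.Random(seed)
        in_dim = obs_dim + latent_dim
        # Random weights stand in for parameters that would be learned
        # by imitating motion capture data (assumption for illustration).
        self.weights = [
            [rng.gauss(0.0, 0.1) for _ in range(in_dim)]
            for _ in range(action_dim)
        ]

    def act(self, body_state, intention):
        x = list(body_state) + list(intention)
        # tanh keeps every control signal inside [-1, 1].
        return [
            math.tanh(sum(w * v for w, v in zip(row, x)))
            for row in self.weights
        ]


def high_level_policy(game_state, latent_dim):
    """Hypothetical task policy: emits a short-horizon motor intention."""
    # Placeholder rule; a real policy would be trained with RL on the game.
    return [math.sin(game_state["ball_distance"] + i) for i in range(latent_dim)]


decoder = MotorDecoder(obs_dim=4, latent_dim=3, action_dim=5)
intention = high_level_policy({"ball_distance": 2.0}, latent_dim=3)
controls = decoder.act([0.1, -0.2, 0.0, 0.3], intention)
```

The point of the split is that the task policy never outputs raw joint commands; it only chooses an intention, and the decoder turns that into movement.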

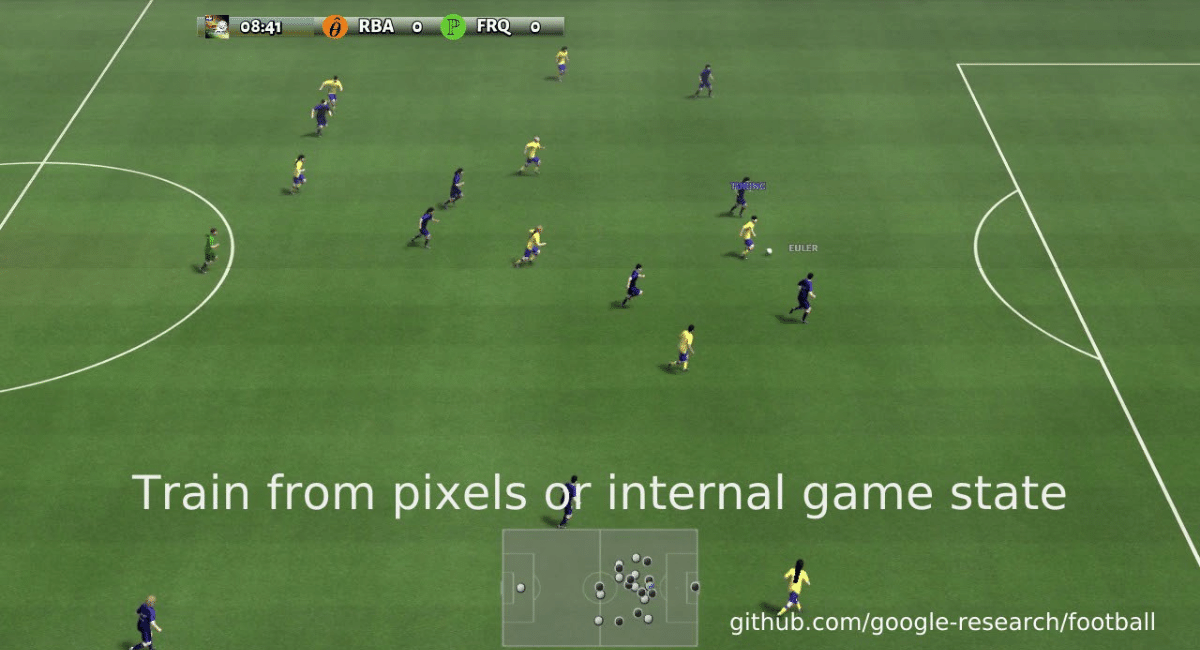

The team's research paper states, "We optimized teams of agents to play simulated football via reinforcement learning, constraining the solution space to that of plausible movements learned using human motion capture data."

Robot engineers have worked for many years to design robots capable of playing soccer, and various tech groups have competed to build the best robot players. Those efforts eventually led to RoboCup, which has multiple leagues in both the real and simulated worlds. Here, researchers took AI programming and learning networks to a new level, training simulated robots to play soccer without being given the rules of the game.

The latest AI approach lets simulated soccer players learn the game by watching other players, much as humans do. They start from scratch and gradually progress to become expert players.

The virtual players first had to learn how to walk, run, and kick the ball across the virtual field. Then, at each new stage, the researchers gave the AI systems recordings of real-world soccer players (motion capture data, according to the paper), allowing them to learn the game's basics by imitating the moves of professional athletes in high-level matches.

After training alone, the virtual players first competed against single opponents, and more players were added as their skills developed. The researchers started with small teams, such as two-on-two, and added more virtual players as the AI players gained expertise, eventually training them on the full sport.
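A staged schedule of this kind can be sketched as follows. This is only an illustration under stated assumptions: the article does not say how the researchers decided when to add players, so the win-rate criterion, the `promote_at` threshold, and the `max_team_size` cap are all hypothetical.

```python
def next_team_size(team_size, win_rate, promote_at=0.6, max_team_size=11):
    """Return the team size for the next training stage.

    promote_at and max_team_size are hypothetical values chosen for
    illustration; the article does not give the actual criteria.
    """
    if win_rate >= promote_at and team_size < max_team_size:
        return team_size + 1
    return team_size


def run_curriculum(win_rates, start_size=1):
    """Apply the promotion rule to a sequence of measured win rates."""
    size = start_size
    history = [size]
    for rate in win_rates:
        size = next_team_size(size, rate)
        history.append(size)
    return history


# Made-up win rates, purely to show how the schedule advances.
schedule = run_curriculum([0.4, 0.7, 0.65, 0.5, 0.8])
print(schedule)  # [1, 1, 2, 3, 3, 4]
```

Teams grow only after a stage where the players did well enough, which mirrors the idea of adding players as skills develop.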

The results were impressive. Although the game was virtual, it looked realistic, with players making decisive moves to achieve their goals. The researchers acknowledged, however, that they had simplified the game: there are no fouls, for instance, and an invisible boundary around the pitch keeps the ball from going out of bounds.

The researchers also noted that training virtual players is time-consuming, which might be a setback when they introduce the technology to real-world robots.
