(Image credit: Smartprix) Designed to reduce en...


OCT, or optical coherence tomography, is an imaging instrument commonly found in ophthalmologists' offices. It is the optical analog of ultrasound: it sends light waves into objects and measures how long they take to return. FMCW (frequency-modulated continuous-wave) LiDAR, meanwhile, sends out a laser beam whose frequency shifts continuously across a range of values. Because the system looks for this specific frequency pattern, it can distinguish its own returning light from any other light source. As a result, it works in all kinds of lighting conditions at high speed and is more accurate than current LiDAR systems.
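
To make the FMCW idea concrete: because the laser frequency is swept at a known rate, light returning from a target is mixed with the light being emitted at that moment, and the difference between the two (the beat frequency) is proportional to distance. The Python sketch below shows only that arithmetic, assuming an ideal linear chirp. The chirp bandwidth, sweep time and target distance are hypothetical values, not parameters of the Duke system, and the sketch does not model how the interference step rejects other light sources.

```python
# Minimal sketch of FMCW ranging, assuming an ideal linear (sawtooth) chirp.
# All numbers below are hypothetical, not taken from the Duke system.

C = 299_792_458.0  # speed of light in m/s


def chirp_slope(bandwidth_hz: float, sweep_time_s: float) -> float:
    """Rate at which the laser frequency changes, in Hz per second."""
    return bandwidth_hz / sweep_time_s


def beat_frequency(distance_m: float, slope_hz_per_s: float) -> float:
    """Beat frequency between the outgoing and the returning light.

    The echo arrives after a round-trip delay tau = 2R / c, during which the
    transmitted frequency has moved on by slope * tau.
    """
    tau = 2.0 * distance_m / C
    return slope_hz_per_s * tau


def distance_from_beat(f_beat_hz: float, slope_hz_per_s: float) -> float:
    """Invert the relation above: R = c * f_beat / (2 * slope)."""
    return C * f_beat_hz / (2.0 * slope_hz_per_s)


if __name__ == "__main__":
    # Hypothetical chirp: 1 GHz swept in 10 microseconds.
    slope = chirp_slope(1e9, 10e-6)
    f_b = beat_frequency(3.0, slope)  # hypothetical target 3 m away
    print(f"beat frequency: {f_b / 1e6:.2f} MHz")
    print(f"recovered distance: {distance_from_beat(f_b, slope):.3f} m")
```

With these made-up numbers, a target 3 m away produces a beat of roughly 2 MHz, and the last line converts that back to 3 m.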

Qian added, "30 years ago, nobody knew autonomous cars or robots would be a thing, so the technology focused on tissue imaging. Now, to make it useful for these emerging fields, we need to trade in its extremely high-resolution capabilities for more distance and speed."
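
Qian's trade-off can be illustrated with two textbook relations for swept-frequency ranging: the depth resolution is about c/(2B) for a sweep of optical bandwidth B, and with N evenly spaced samples per sweep the maximum imaging depth is about N/2 resolution cells. The sketch below assumes those idealized relations (no windowing, dispersion or sensitivity roll-off), and the bandwidths and sample count are hypothetical. Resolution here is not the same quantity as the depth accuracy quoted for the Duke system.

```python
# Sketch of the bandwidth trade-off in swept-frequency ranging (OCT or FMCW).
# Assumes N evenly spaced samples in optical frequency per sweep and ignores
# windowing, dispersion and roll-off; all numbers are hypothetical.

C = 299_792_458.0  # speed of light in m/s


def depth_resolution(bandwidth_hz: float) -> float:
    """Smallest resolvable depth separation: c / (2B)."""
    return C / (2.0 * bandwidth_hz)


def max_depth(bandwidth_hz: float, n_samples: int) -> float:
    """Nyquist-limited imaging depth: N/2 resolution cells, i.e. N*c / (4B)."""
    return n_samples * depth_resolution(bandwidth_hz) / 2.0


if __name__ == "__main__":
    n = 2048  # hypothetical samples per sweep
    for label, bw in [("wide, OCT-like", 10e12), ("narrow, LiDAR-like", 100e9)]:
        print(f"{label:>20}: resolution {depth_resolution(bw) * 1e3:.3f} mm, "
              f"range {max_depth(bw, n):.3f} m")
```

Shrinking the bandwidth 100-fold with the same number of samples makes each depth cell 100 times coarser but lets the same sweep cover 100 times more depth.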

In a paper published on March 29, 2022, in the journal Nature Communications, Duke researchers demonstrated how a few tricks learned from OCT research can increase the data throughput of previous FMCW LiDAR systems 25-fold while still achieving submillimeter depth accuracy.

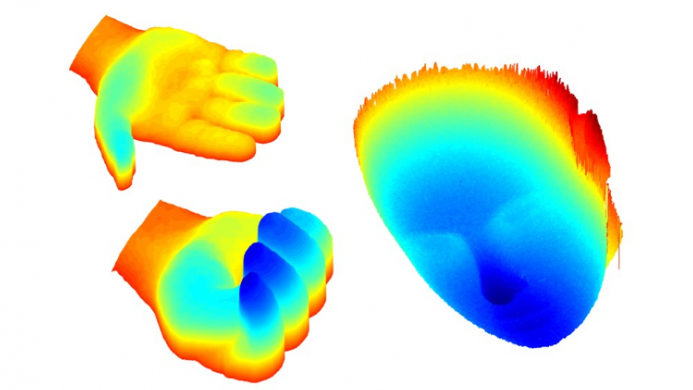

Instead of the rotating mirrors used in previous LiDAR systems, the Duke team used a diffraction grating, which, like a prism, sends different wavelengths of light in different directions. The grating covers a wider area quickly without losing depth or location accuracy. The team also narrowed the range of frequencies used by OCT, achieving a much greater imaging range and speed than traditional LiDAR.
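
The grating's role can be sketched with the grating equation for a transmission grating at normal incidence, d·sin θ = m·λ: as the laser sweeps its wavelength, the angle of the diffracted beam changes, so the frequency sweep itself steers the beam along one axis without moving parts. The Python sketch below assumes first-order diffraction, a hypothetical 500 lines/mm grating and a hypothetical 1.50 to 1.60 µm sweep; it is an illustration of the principle, not the Duke team's optical design.

```python
import math

# Sketch of wavelength-to-angle beam steering with a transmission diffraction
# grating at normal incidence: d * sin(theta_m) = m * wavelength.
# Groove density and wavelengths are hypothetical illustration values.


def diffraction_angle_deg(wavelength_m: float, lines_per_mm: float, order: int = 1) -> float:
    """Angle of the given diffraction order, in degrees from the grating normal."""
    d = 1e-3 / lines_per_mm  # groove spacing in metres
    s = order * wavelength_m / d
    if abs(s) > 1.0:
        raise ValueError("this order does not propagate at this wavelength")
    return math.degrees(math.asin(s))


def scan_angles(start_m: float, stop_m: float, steps: int, lines_per_mm: float) -> list[float]:
    """Beam angles visited as the laser sweeps linearly from start to stop wavelength."""
    if steps < 2:
        raise ValueError("need at least two steps")
    return [
        diffraction_angle_deg(start_m + (stop_m - start_m) * i / (steps - 1), lines_per_mm)
        for i in range(steps)
    ]


if __name__ == "__main__":
    angles = scan_angles(1.50e-6, 1.60e-6, steps=5, lines_per_mm=500.0)
    print(", ".join(f"{a:.2f} deg" for a in angles))
    print(f"field of view: {angles[-1] - angles[0]:.2f} deg")
```

With these values the beam moves from about 48.6° to about 53.1°, a field of view of roughly 4.5° along one axis; a denser grating or a wider sweep spreads it further.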

The result is a faster and more accurate FMCW LiDAR, built on eye-imaging technology, that helps robots see better by efficiently capturing the details of a moving human body in real time. Professor Izatt remarked, "These are exactly the capabilities needed for robots to see and interact with humans safely or even replace avatars with live 3D video in augmented reality." He added, "Our vision is to develop a new generation of LiDAR-based 3D cameras which are fast and capable enough to enable integration of 3D vision into all sorts of products." Izatt also said, "The world around us is 3D, so if we want robots and other automated systems to interact with us naturally and safely, they need to be able to see us as well as we see them."